Unveiling The Core Concepts Of Machine Learning Training Ppt

This training module on Unveiling the Core Concepts of Machine Learning offers a detailed understanding of the core concepts and principles of machine learning. It presents a brief history of ML and comprehensively covers machine learning algorithms such as Supervised Learning (Regression, Decision Tree, Random Forest, Classification), Unsupervised Learning (Clustering, Association Analysis), Hidden Markov Model, and Reinforcement Learning. It also discusses the importance of machine learning and the steps involved in it. Further, it highlights the advantages and disadvantages of machine learning along with its future. It also includes Activities, Key Takeaways, and Discussion Questions related to the topic to make the training session more interactive. The deck has PPT slides on About Us, Vision, Mission, Goal, 30-60-90 Days Plan, Timeline, Roadmap, Training Completion Certificate, Energizer Activities, and Client Proposal.


PowerPoint presentation slides

Presenting Training Deck on Unveiling the Core Concepts of Machine Learning. This deck comprises 117 slides. Each slide is well crafted and designed by our PowerPoint experts. This PPT presentation is thoroughly researched by experts, and every slide contains appropriate content. All slides are customizable: you can add or delete content as needed and make the required changes to the charts and graphs. Download this professionally designed business presentation, add your content, and present it with confidence.

People who downloaded this PowerPoint presentation also viewed the following :

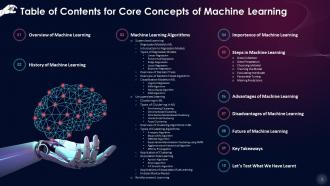

Content of this PowerPoint Presentation

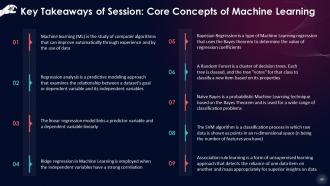

Slide 4

This slide introduces Machine Learning, the study of computer algorithms that improve automatically through experience and data. It is a component of Artificial Intelligence.

Instructor Notes:

Machine learning algorithms have many applications, such as medicine, email filtering, speech recognition, and computer vision, where it is difficult or infeasible to develop conventional algorithms to perform the required tasks.

Slide 5

This slide gives information about the history of ‘Machine Learning’. Arthur Samuel, a pioneer in the fields of computer gaming and Artificial Intelligence, coined the term "Machine Learning" in 1959.

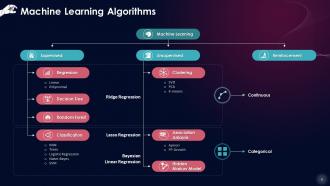

Slide 6

This slide explains that Machine Learning algorithms improve themselves through training. Three prominent strategies are currently used to train ML algorithms: Supervised learning, Unsupervised learning, and Reinforcement learning.

Slide 7

This slide gives an overview of supervised learning, one of the most basic types of Machine Learning, in which the algorithm is trained on labeled data. Although the data must be labeled correctly for this method to work, supervised learning is powerful when used in the right situations.

Slide 8

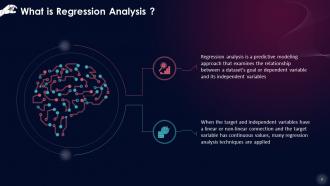

This slide demonstrates that regression analysis is the fundamental approach used in Machine Learning to address regression problems through data modeling. It involves finding the best-fit line: the line that minimizes the overall distance (typically the sum of squared vertical distances) between the line and the data points. The regression approach is mainly used to determine the strength of predictors, forecast trends and time series, and analyze cause-and-effect relationships.

Slide 9

This slide describes linear and logistic regression, the two most popular regression analysis approaches used to solve problems with Machine Learning. However, there are many other types of regression analysis in Machine Learning, and which one to use depends on the nature of the data.

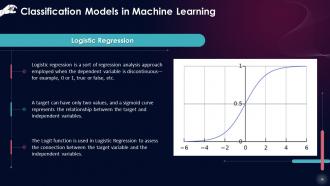

Slide 10

This slide states that there are many types of regression analysis, and the choice of approach depends on several factors: the type of target variable, the shape of the regression line, and the number of independent variables.

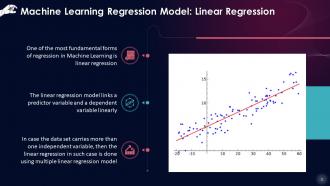

Slide 11

This slide describes linear regression, one of the most fundamental forms of regression in Machine Learning. A linear regression model describes the relationship between a predictor (independent) variable and a dependent variable as a straight line.

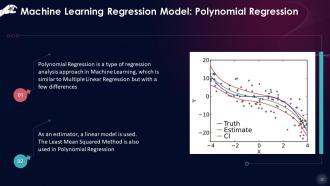
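As an illustration of the best-fit line described above, here is a minimal sketch of simple linear regression fitted by ordinary least squares, using only the Python standard library. The data points are hypothetical; note that the fitted line does not pass through every point, it only minimizes the squared vertical distances.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (slope, intercept) minimizing the sum of squared vertical distances."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: roughly y = 2x with some noise
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
slope, intercept = fit_simple_linear_regression(xs, ys)
print(slope, intercept)  # about 1.99 and 0.05
```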

Slide 12

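This slide states that Polynomial Regression is a type of regression analysis in Machine Learning. It is similar to Multiple Linear Regression, with one key difference: the relationship between the independent variable x and the dependent variable y is modeled as an nth-degree polynomial in x. Because the model is still linear in its coefficients, a linear model is used as the estimator, and the coefficients are fitted with the least squares method.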

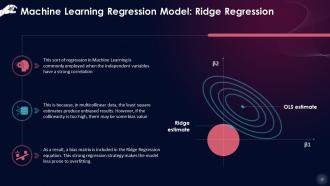
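To show why polynomial regression still counts as a linear model, the sketch below builds the powers of x as features and solves the least-squares normal equations with a small Gaussian-elimination helper. The helper and the noise-free quadratic data are assumptions for illustration only.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_polynomial(xs, ys, degree):
    # Columns 1, x, x^2, ...: nonlinear in x but linear in the coefficients
    rows = [[x ** p for p in range(degree + 1)] for x in xs]
    # Least-squares normal equations: (X^T X) w = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(degree + 1)] for i in range(degree + 1)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(degree + 1)]
    return solve_linear_system(xtx, xty)

xs = [-2, -1, 0, 1, 2, 3]
ys = [1 + 2 * x + 3 * x * x for x in xs]  # exact quadratic, no noise
coeffs = fit_polynomial(xs, ys, degree=2)  # approximately [1.0, 2.0, 3.0]
```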

Slide 13

This slide describes Ridge Regression, a type of regression in Machine Learning used when the independent variables are highly correlated (multicollinear). With multicollinear data, ordinary least-squares estimates are unbiased but have high variance, so they can be far from the true values. Ridge Regression adds a penalty on the size of the coefficients, which introduces a small amount of bias in exchange for a reduction in variance.
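A minimal sketch of ridge regression with two nearly identical (multicollinear) features, assuming centered data and no intercept. It solves the closed form (X^T X + λI) w = X^T y for a 2x2 system; setting λ = 0 gives ordinary least squares, so the two can be compared. The data values are hypothetical.

```python
def ridge_2d(x1, x2, y, lam):
    """Ridge regression with two features and no intercept (assumes centered data).

    Solves (X^T X + lam * I) w = X^T y for the 2x2 case using Cramer's rule."""
    a11 = sum(v * v for v in x1) + lam
    a22 = sum(v * v for v in x2) + lam
    a12 = sum(u * v for u, v in zip(x1, x2))
    b1 = sum(u * t for u, t in zip(x1, y))
    b2 = sum(v * t for v, t in zip(x2, y))
    det = a11 * a22 - a12 * a12
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)

x1 = [-2.0, -1.0, 0.0, 1.0, 2.0]
x2 = [-2.1, -0.9, 0.1, 0.9, 2.0]  # almost a copy of x1: strong multicollinearity
y = [-4.0, -2.1, 0.2, 1.9, 4.0]
ols = ridge_2d(x1, x2, y, lam=0.0)    # lam = 0 is ordinary least squares
ridge = ridge_2d(x1, x2, y, lam=1.0)  # penalty shrinks and evens out the weights
```

Here the least-squares weights come out as roughly (1.5, 0.5), while the ridge weights are both close to 0.95: the penalty spreads the effect across the correlated features and shrinks the overall coefficient size.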

Slide 14

This slide explains that lasso regression is a type of regression in Machine Learning that performs both regularization and feature selection. It penalizes the absolute size of the regression coefficients; as a result, some coefficient values can shrink all the way to 0, which is not the case with Ridge Regression.

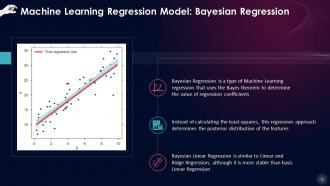
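The feature-selection effect of lasso can be sketched with coordinate descent and the soft-thresholding operator, which sets a coefficient exactly to zero when its correlation with the residual is smaller than the penalty. This is one common way to fit lasso, not necessarily the one the deck uses; the objective scaling (1/2n), centered data, and toy values are assumptions.

```python
def soft_threshold(value, threshold):
    # Shrink toward zero; anything inside [-threshold, threshold] becomes exactly 0
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0

def lasso_coordinate_descent(rows, y, lam, n_iters=200):
    """Minimize (1/2n)*||y - Xw||^2 + lam*||w||_1 (no intercept; assumes centered data)."""
    n, d = len(rows), len(rows[0])
    w = [0.0] * d
    for _ in range(n_iters):
        for j in range(d):
            # Correlation of feature j with the residual that excludes feature j
            rho = sum(row[j] * (t - sum(row[k] * w[k] for k in range(d) if k != j))
                      for row, t in zip(rows, y)) / n
            z = sum(row[j] ** 2 for row in rows) / n
            w[j] = soft_threshold(rho, lam) / z
    return w

# Feature 0 drives y; feature 1 is weakly related noise
rows = [[-2.0, 0.3], [-1.0, -0.4], [0.0, 0.2], [1.0, -0.3], [2.0, 0.2]]
y = [-4.1, -1.9, 0.1, 2.0, 3.9]
weights = lasso_coordinate_descent(rows, y, lam=0.5)  # weights[1] is exactly 0.0
```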

Slide 15

This slide showcases Bayesian Regression, a type of Machine Learning regression that uses Bayes' Theorem to determine the values of the regression coefficients. Instead of computing a single least-squares estimate, this approach determines the posterior distribution of the model's coefficients.
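A minimal sketch of the Bayesian idea for the simplest case: a single slope w in y = w·x + noise, with an assumed Gaussian prior w ~ N(0, prior_var) and Gaussian noise of known variance. In this conjugate case the posterior is also Gaussian and has a closed form, and it narrows as more data arrives. The model and data are assumptions chosen for illustration.

```python
def bayesian_slope_posterior(xs, ys, prior_var, noise_var):
    """Posterior (mean, variance) of slope w for y = w*x + Gaussian noise.

    Assumes prior w ~ N(0, prior_var) and known noise variance noise_var."""
    # Posterior precision = prior precision + data precision
    precision = 1.0 / prior_var + sum(x * x for x in xs) / noise_var
    post_var = 1.0 / precision
    post_mean = post_var * sum(x * y for x, y in zip(xs, ys)) / noise_var
    return post_mean, post_var

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
few_mean, few_var = bayesian_slope_posterior(xs[:1], ys[:1], prior_var=1.0, noise_var=1.0)
all_mean, all_var = bayesian_slope_posterior(xs, ys, prior_var=1.0, noise_var=1.0)
# With one point the prior pulls the estimate to 1.0; with all three it moves to 28/15,
# and the posterior variance shrinks from 0.5 to 1/15.
```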

Slide 16

This slide states that decision trees are a handy tool with many applications. Decision trees can be used to solve both classification and regression problems. As the name suggests, a decision tree uses a flowchart-like tree structure to show the predictions that result from a series of feature-based splits. It starts at a root node and ends with a decision at a leaf node.

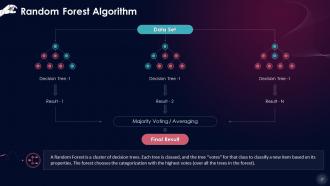
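A small classification tree can be sketched in plain Python by choosing, at each node, the feature-based split with the lowest Gini impurity. Gini is one common splitting criterion (entropy is another); the `[age, income]` data and the `max_depth` setting are hypothetical.

```python
from collections import Counter

def gini(labels):
    # Gini impurity: 1 - sum of squared class proportions (0 means a pure node)
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(rows, labels):
    """Return (feature, threshold) with the lowest weighted Gini impurity, or None."""
    best, best_score = None, gini(labels)
    for f in range(len(rows[0])):
        for threshold in sorted({r[f] for r in rows}):
            left = [l for r, l in zip(rows, labels) if r[f] <= threshold]
            right = [l for r, l in zip(rows, labels) if r[f] > threshold]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if score < best_score:
                best, best_score = (f, threshold), score
    return best

def build_tree(rows, labels, depth=0, max_depth=3):
    split = best_split(rows, labels)
    if split is None or depth == max_depth:
        return Counter(labels).most_common(1)[0][0]  # leaf node: majority class
    f, t = split
    left = [(r, l) for r, l in zip(rows, labels) if r[f] <= t]
    right = [(r, l) for r, l in zip(rows, labels) if r[f] > t]
    return {
        "feature": f, "threshold": t,
        "left": build_tree([r for r, _ in left], [l for _, l in left], depth + 1, max_depth),
        "right": build_tree([r for r, _ in right], [l for _, l in right], depth + 1, max_depth),
    }

def predict(node, row):
    # Start at the root and follow the splits until a leaf (a class label) is reached
    while isinstance(node, dict):
        node = node["left"] if row[node["feature"]] <= node["threshold"] else node["right"]
    return node

# Hypothetical data: [age, income in thousands] -> buys the product?
rows = [[22, 20], [25, 32], [47, 60], [52, 80], [46, 25], [56, 90], [23, 70], [60, 30]]
labels = ["no", "no", "yes", "yes", "no", "yes", "no", "no"]
tree = build_tree(rows, labels)
print(predict(tree, [50, 75]))  # "yes"
```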

Slide 17

This slide gives an overview of the random forest algorithm. A Random Forest is an ensemble of decision trees. To classify a new item based on its attributes, each tree produces a classification, that is, it "votes" for a class. The forest chooses the classification with the most votes over all the trees in the forest.
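The voting idea can be sketched with a deliberately simplified forest: each "tree" is a depth-1 stump trained on a bootstrap sample and a random subset of features, and the forest returns the majority vote. Real random forests grow much deeper trees; the stump simplification, the parameter values, and the toy data are assumptions.

```python
import random
from collections import Counter

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def fit_stump(rows, labels, features):
    """Depth-1 tree restricted to the given features: (feature, threshold, left, right)."""
    majority = Counter(labels).most_common(1)[0][0]
    best, best_score = (None, None, majority, majority), gini(labels)
    for f in features:
        for t in sorted({r[f] for r in rows}):
            left = [l for r, l in zip(rows, labels) if r[f] <= t]
            right = [l for r, l in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if score < best_score:
                best_score = score
                best = (f, t, Counter(left).most_common(1)[0][0], Counter(right).most_common(1)[0][0])
    return best

def stump_predict(stump, row):
    f, t, left_label, right_label = stump
    if f is None:
        return left_label  # no useful split was found: majority class
    return left_label if row[f] <= t else right_label

def fit_forest(rows, labels, n_trees=25, n_features=1, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]          # bootstrap sample
        feats = rng.sample(range(len(rows[0])), n_features)     # random feature subset
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feats))
    return forest

def forest_predict(forest, row):
    # Every tree votes; the class with the most votes wins
    return Counter(stump_predict(s, row) for s in forest).most_common(1)[0][0]

rows = [[1.0, 1.2], [1.5, 0.8], [0.8, 1.1], [5.0, 5.2], [4.6, 4.9], [5.3, 4.7]]
labels = ["a", "a", "a", "b", "b", "b"]
forest = fit_forest(rows, labels)
```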

Slide 18

This slide gives an overview of logistic regression, a regression analysis approach used when the dependent variable is discrete (binary), for example 0 or 1, true or false, and so on. Logistic Regression uses the logit function to model the relationship between the target variable and the independent variables.
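A minimal sketch of one-feature logistic regression fitted by batch gradient descent on the log-loss. The sigmoid is the inverse of the logit function, so the model's output is a probability between 0 and 1. The learning rate, epoch count, and "hours studied" data are assumptions for illustration.

```python
import math

def sigmoid(z):
    # Inverse of the logit: maps any real number to a probability in (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.5, epochs=5000):
    """One-feature logistic regression trained with batch gradient descent on log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # For log-loss, the gradient is (predicted probability - label) times the input
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b

# Hypothetical data: hours studied -> passed (1) or failed (0)
hours = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
passed = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]
w, b = fit_logistic(hours, passed)
print(sigmoid(w * 1.0 + b), sigmoid(w * 4.5 + b))  # low probability, then high probability
```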

Slide 19

This slide explains that KNN (K Nearest Neighbors) is a simple algorithm that stores all available cases and classifies new cases by a majority vote of their k nearest neighbors.

Instructor’s Notes:

KNN can be understood through a real-life analogy: if you want to learn more about someone, you talk to their friends and coworkers.

Consider the following before choosing the K Nearest Neighbors algorithm:

- KNN is computationally expensive

- Variables should be normalized; otherwise, variables with larger ranges will bias the algorithm

- Data still needs to be pre-processed
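The points above, particularly normalization, can be seen in a minimal KNN sketch that uses Euclidean distance and min-max scaling. The `[age, income]` data is hypothetical: without scaling, income (a large-range variable) dominates the distance and flips the prediction.

```python
import math
from collections import Counter

def min_max_scale(rows):
    """Rescale every feature to [0, 1]; returns the scaled rows and the scaling function."""
    lows = [min(col) for col in zip(*rows)]
    highs = [max(col) for col in zip(*rows)]

    def scale(row):
        return [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(row, lows, highs)]

    return [scale(r) for r in rows], scale

def knn_predict(train_rows, train_labels, query, k=3):
    # Every training case is stored; all the work happens at prediction time
    dists = sorted((math.dist(r, query), label) for r, label in zip(train_rows, train_labels))
    return Counter(label for _, label in dists[:k]).most_common(1)[0][0]

# Hypothetical data: [age, income in dollars]
rows = [[25, 40000], [30, 42000], [35, 41000], [60, 40500], [65, 41500], [70, 42500]]
labels = ["young", "young", "young", "senior", "senior", "senior"]
query = [28, 42400]

raw_pred = knn_predict(rows, labels, query)               # income dominates: "senior"
scaled_rows, scale = min_max_scale(rows)
scaled_pred = knn_predict(scaled_rows, labels, scale(query))  # after scaling: "young"
```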

Slide 20

This slide states that Naive Bayes is a probabilistic Machine Learning technique based on Bayes' Theorem that is used for a wide range of classification problems. A Naive Bayes model is straightforward to build and works well with very large datasets. It is simple to use and can perform competitively with far more sophisticated classification algorithms.
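A minimal sketch of a word-count (multinomial) Naive Bayes text classifier with add-one smoothing. It scores each class as log P(class) plus the sum of log P(word | class), treating words as independent given the class, which is the "naive" assumption. The tiny spam/ham corpus is hypothetical.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(docs, labels):
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)  # class -> word frequencies
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return class_counts, word_counts, vocab

def predict_naive_bayes(model, doc):
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    scores = {}
    for c in class_counts:
        score = math.log(class_counts[c] / total_docs)  # log prior
        total_words = sum(word_counts[c].values())
        for w in doc.split():
            # Laplace (add-one) smoothing keeps unseen words from zeroing out the score
            score += math.log((word_counts[c][w] + 1) / (total_words + len(vocab)))
        scores[c] = score
    return max(scores, key=scores.get)

docs = ["win money now", "cheap money offer", "meeting at noon", "project meeting notes"]
labels = ["spam", "spam", "ham", "ham"]
model = train_naive_bayes(docs, labels)
print(predict_naive_bayes(model, "money offer now"))  # "spam"
```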

Slide 21

This slide explains that the SVM (Support Vector Machine) algorithm is a classification method in which raw data is plotted as points in an n-dimensional space (n being the number of features). The value of each feature becomes the value of a particular coordinate, which makes the data easier to classify. The algorithm then finds a separating line (a hyperplane in higher dimensions) that divides the classes with the widest possible margin.
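A minimal sketch of a linear SVM trained with Pegasos-style stochastic subgradient descent on the regularized hinge loss. This is only one way to train an SVM; libraries often use other solvers. Appending a constant 1.0 feature, so the bias is learned (and regularized) like an ordinary weight, is a simplifying assumption, and so are the toy points.

```python
import random

def train_linear_svm(points, labels, lam=0.01, steps=2000, seed=0):
    """Pegasos-style training of a linear SVM; labels must be +1 or -1."""
    rng = random.Random(seed)
    data = [(list(p) + [1.0], y) for p, y in zip(points, labels)]  # last weight acts as bias
    w = [0.0] * len(data[0][0])
    for t in range(1, steps + 1):
        x, y = rng.choice(data)
        eta = 1.0 / (lam * t)  # decreasing step size
        margin = y * sum(wi * xi for wi, xi in zip(w, x))
        # Regularization shrinks w; a margin violation also pushes w toward the point
        w = [(1 - eta * lam) * wi for wi in w]
        if margin < 1:
            w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return w

def svm_predict(w, point):
    score = sum(wi * xi for wi, xi in zip(w, list(point) + [1.0]))
    return 1 if score >= 0 else -1

points = [(2, 2), (3, 3), (2, 3), (3, 2), (-2, -2), (-3, -3), (-2, -3), (-3, -2)]
labels = [1, 1, 1, 1, -1, -1, -1, -1]
w = train_linear_svm(points, labels)
```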

Slide 23

This slide explains that Unsupervised Machine Learning has the benefit of working with unlabeled data. This means that no human labor is needed to make the dataset machine-readable, which allows the software to work on much larger datasets.

Slide 24

This slide introduces clustering in Machine Learning, which divides a population or set of data points into groups (clusters) so that data points in the same group are more similar to one another than to data points in other groups. In other words, it classifies objects based on their similarities and dissimilarities.

Slide 25

This slide lists the variety of clustering techniques available. The most common clustering approaches used in Machine Learning are Partitioning Clustering, Density-Based Clustering, Distribution Model-Based Clustering, Hierarchical Clustering, and Fuzzy Clustering.

Slide 26

This slide illustrates Partitioning (Centroid-Based) Clustering, which divides the data into non-hierarchical groups. The K-Means Clustering technique is a well-known example. The dataset is partitioned into K groups, where K is the predefined number of groups. Each cluster center is chosen so that the distance between the data points in a cluster and that cluster's own centroid is as small as possible.

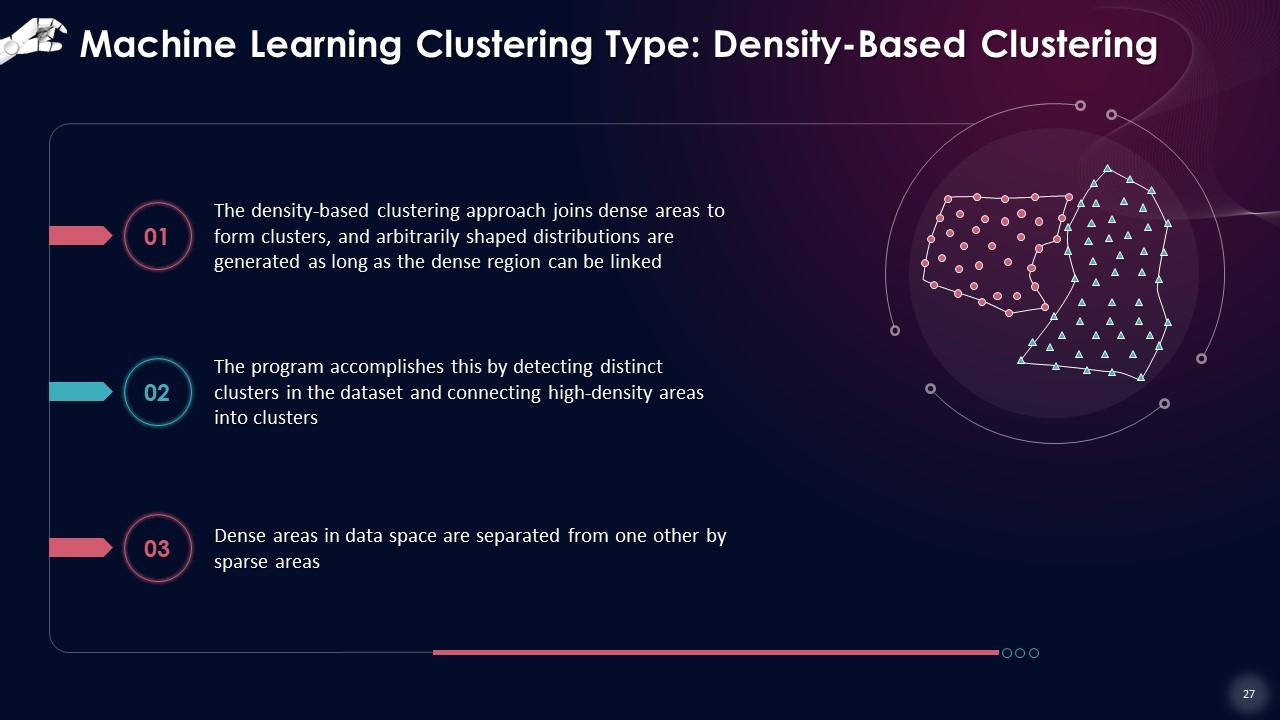

Slide 27

This slide states that the density-based clustering approach connects dense areas into clusters, so clusters of arbitrary shape can form as long as the dense regions can be linked. The algorithm does this by identifying distinct dense regions in the dataset and connecting areas of high density into clusters.

Instructor Notes: If the dataset has varying densities and many dimensions, these algorithms may struggle to cluster the data points.

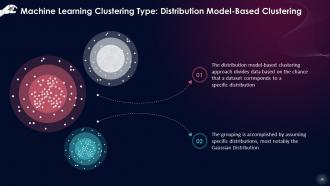

Slide 28

This slide explains that the distribution model-based clustering approach divides data based on the probability that each data point belongs to a particular distribution. The grouping is done by assuming specific distributions, most commonly the Gaussian distribution.

Instructor Notes: The Expectation-Maximization (EM) clustering method, which uses Gaussian Mixture Models (GMM), is an example of this kind of clustering.

Slide 29

This slide showcases Hierarchical Clustering as an alternative to partitioning clustering, because it does not require the number of clusters to be specified in advance. The dataset is divided into clusters that form a tree-like structure known as a dendrogram.

Slide 30

This slide states that Fuzzy Clustering is a soft clustering technique in which a data object can belong to more than one group (cluster). Each data point has a set of membership coefficients that reflect its degree of membership in each cluster.
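As an example of membership coefficients, the sketch below computes the standard fuzzy c-means memberships of one point for fixed cluster centers: u_j = 1 / Σ_k (d_j / d_k)^(2/(m−1)), where m > 1 is the "fuzzifier". The centers and the choice m = 2 are assumptions; a full fuzzy c-means run would also update the centers.

```python
def fuzzy_memberships(point, centers, m=2.0):
    """Fuzzy c-means membership of one point in each cluster (the values sum to 1)."""
    dists = [sum((p - c) ** 2 for p, c in zip(point, center)) ** 0.5 for center in centers]
    if 0.0 in dists:
        # The point sits exactly on a center: full membership in that cluster
        return [1.0 if d == 0.0 else 0.0 for d in dists]
    exp = 2.0 / (m - 1.0)
    return [1.0 / sum((dj / dk) ** exp for dk in dists) for dj in dists]

centers = [(0.0, 0.0), (4.0, 0.0)]
u = fuzzy_memberships((1.0, 0.0), centers)
print(u)  # about [0.9, 0.1]: mostly in the first cluster, partly in the second
```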

Slide 31

This slide gives an overview of clustering algorithms, which are unsupervised approaches: the input data is not labeled. Problem solving relies on what the algorithm has learned from solving similar problems during training.

Instructor Notes:

- Clustering methods can be categorized based on the models described above. Many clustering methods have been described, but only a few are widely used

- The type of data being used determines the choice of clustering algorithm. For example, some algorithms must estimate the number of clusters in a given dataset, whereas others must find the shortest distances between the observations in the dataset

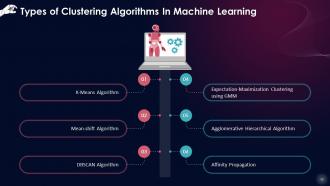

Slide 32

This slide lists the different types of clustering algorithms in unsupervised Machine Learning. These include K-Means, mean-shift, DBSCAN, expectation-maximization clustering using GMM, agglomerative hierarchical algorithm, and affinity propagation.

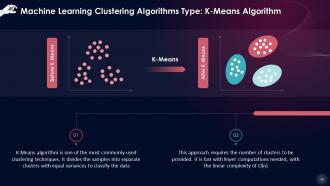

Slide 33

This slide gives an overview of the K-Means clustering algorithm, an unsupervised approach that divides the samples into separate clusters of equal variance to classify the data.

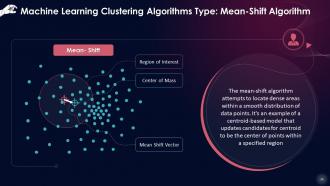
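A minimal sketch of K-Means using Lloyd's algorithm, which alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its points. Initializing from randomly chosen data points is one simple choice (libraries often use k-means++). The points are hypothetical.

```python
import math
import random

def k_means(points, k, iters=100, seed=0):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iters):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: math.dist(p, centroids[j]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster (keep it if empty)
        new_centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[j]
            for j, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: assignments will no longer change
        centroids = new_centroids
    return centroids, clusters

points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 8.5), (8.5, 9)]
centroids, clusters = k_means(points, k=2)
```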

Slide 34

This slide introduces the mean-shift algorithm, which attempts to locate dense areas within a smooth distribution of data points. It is a centroid-based model that repeatedly updates candidate centroids to be the mean of the points within a given region.

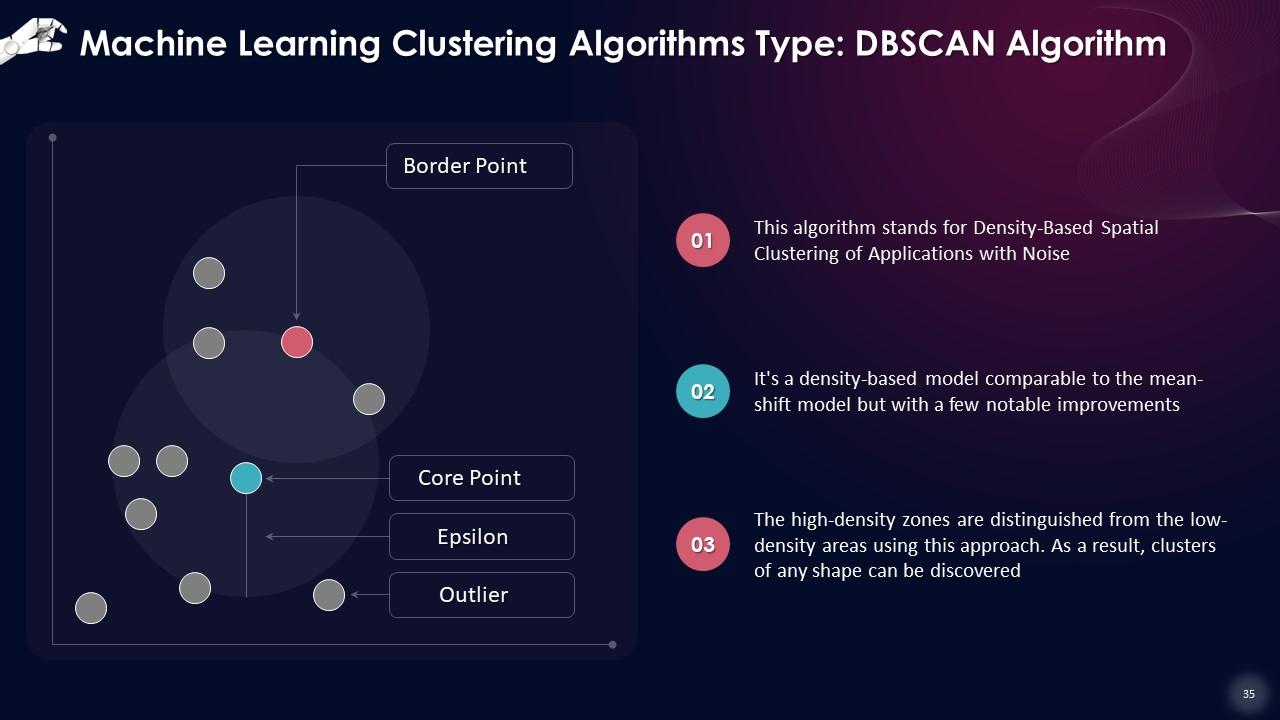
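A minimal one-dimensional sketch of mean shift with a flat kernel. Every data point starts as a candidate centroid and is repeatedly moved to the mean of the points within `bandwidth` of it, climbing toward a dense region; candidates that end up at the same spot are merged. The bandwidth, the merge rule, and the data are assumptions.

```python
def mean_shift_1d(points, bandwidth, iters=50, tol=1e-6):
    """Flat-kernel mean shift in one dimension; returns the modes (cluster centers)."""
    modes = []
    for start in points:
        x = start
        for _ in range(iters):
            window = [p for p in points if abs(p - x) <= bandwidth]
            new_x = sum(window) / len(window)  # shift to the mean of the local window
            if abs(new_x - x) < tol:
                break
            x = new_x
        # Merge candidates that converged to (nearly) the same location
        if all(abs(x - m) > bandwidth / 2 for m in modes):
            modes.append(x)
    return modes

data = [1.0, 1.2, 0.8, 1.1, 5.0, 5.3, 4.9, 5.1]
modes = mean_shift_1d(data, bandwidth=1.0)
print(modes)  # about [1.025, 5.075]; the number of clusters was not specified
```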

Slide 35

This slide states that DBSCAN stands for Density-Based Spatial Clustering of Applications with Noise. It is a density-based model similar to the mean-shift model, but with a few notable improvements. This approach separates high-density regions from low-density regions.

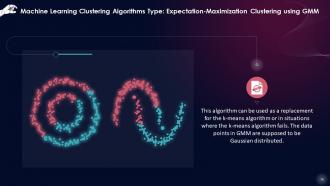
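A compact DBSCAN sketch in plain Python. A point with at least `min_pts` neighbors within `eps` (counting itself) is a core point. Clusters grow outward from core points, border points join a cluster without expanding it, and anything unreachable is labeled -1 (noise). The parameter values and points are hypothetical.

```python
import math

def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    labels = [None] * len(points)

    def neighbors(i):
        return [j for j in range(len(points)) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise for now; may later be claimed as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point reachable from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is a core point: keep expanding
    return labels

points = [(0, 0), (0, 0.5), (0.5, 0), (0.5, 0.5),
          (5, 5), (5, 5.5), (5.5, 5), (5.5, 5.5), (10, 0)]
labels = dbscan(points, eps=0.8, min_pts=3)
print(labels)  # [0, 0, 0, 0, 1, 1, 1, 1, -1]
```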

Slide 36

This slide showcases that the Expectation-Maximization Clustering using GMM algorithm can be used in place of the k-means algorithm or in situations where k-means fails. In GMM, the data points are assumed to be Gaussian distributed.

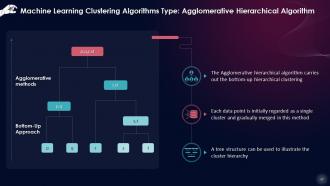
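A minimal sketch of Expectation-Maximization for a one-dimensional Gaussian mixture. The E-step computes soft assignments (responsibilities) and the M-step re-estimates each component's weight, mean, and variance from them. The starting values, the small variance floor, and the data are assumptions.

```python
import math

def normal_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def gmm_em_1d(data, means, variances, weights, iters=100):
    """EM for a 1-D Gaussian mixture; returns (means, variances, weights, responsibilities)."""
    means, variances, weights = list(means), list(variances), list(weights)
    k = len(means)
    for _ in range(iters):
        # E-step: probability that each component generated each point (soft assignment)
        resp = []
        for x in data:
            p = [weights[j] * normal_pdf(x, means[j], variances[j]) for j in range(k)]
            total = sum(p)
            resp.append([pj / total for pj in p])
        # M-step: re-estimate parameters from the responsibility-weighted data
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / len(data)
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj
            variances[j] = max(var, 1e-6)  # floor avoids a collapsing variance
    return means, variances, weights, resp

data = [1.0, 1.3, 0.7, 1.1, 0.9, 6.0, 6.4, 5.7, 6.1, 5.8]
means, variances, weights, resp = gmm_em_1d(data, [0.0, 4.0], [1.0, 1.0], [0.5, 0.5])
```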

Slide 37

This slide explains that the Agglomerative hierarchical algorithm performs bottom-up hierarchical clustering. In this method, each data point initially forms its own cluster, and clusters are then gradually merged.

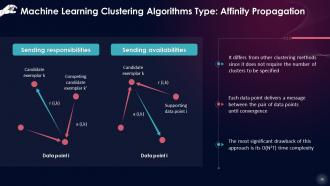
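A minimal bottom-up clustering sketch on one-dimensional points using single linkage, where the distance between two clusters is the distance between their closest members. Single linkage is one of several linkage choices (complete, average, and Ward are others). The recorded merge history is the information a dendrogram draws. The data and the stopping point are hypothetical.

```python
def agglomerative(points, n_clusters):
    """Single-linkage agglomerative clustering of 1-D points."""
    clusters = [[p] for p in points]  # every point starts as its own cluster
    merges = []  # (cluster_a, cluster_b, distance): the dendrogram's history
    while len(clusters) > n_clusters:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = min(abs(a - b) for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        merges.append((clusters[i], clusters[j], d))
        clusters[i] = clusters[i] + clusters[j]  # merge the two closest clusters
        del clusters[j]
    return clusters, merges

clusters, merges = agglomerative([1.0, 1.5, 5.0, 5.2, 9.0], n_clusters=3)
print(clusters)  # [[1.0, 1.5], [5.0, 5.2], [9.0]]
```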

Slide 38

This slide gives an overview of affinity propagation, which differs from other clustering methods because it does not require the number of clusters to be specified. Pairs of data points exchange messages until the algorithm converges.

Slide 39

This slide lists a few well-known uses of clustering in Machine Learning, such as cancer cell detection, search engines, customer segmentation, biological sciences, and land use.

Instructor Notes:

- In the detection of cancer cells: Clustering techniques are commonly used to identify malignant cells. They separate cancerous and non-cancerous data into distinct groups

- Used in search engines: Search engines also use clustering. Search results are based on the objects closest to the search query, which the engine finds by grouping related data objects together, separate from dissimilar ones. The quality of the clustering method used determines the accuracy of the query results

- Segmentation of customers: It is used in market research and social media to segregate customers based on their interests and choices

- In biological sciences: It is used in biology to classify plant and animal species using the image recognition approach

- In land use: The clustering approach is employed in the GIS database to discover areas of similar land use. This can be advantageous in determining what function a specific land parcel should be used for

Slide 40

This slide introduces association rule learning, an unsupervised learning approach that detects the dependency of one data item on another and maps these dependencies to provide better insights into the data.

Instructor Notes:

Association rule learning algorithms are classified into three types:

- Apriori

- Eclat

- F-P Growth Algorithm

Slide 41

This slide states that the Apriori algorithm is used for market basket analysis and helps in understanding which items are likely to be purchased together. It can also be used in healthcare to identify patients' reactions to drugs.

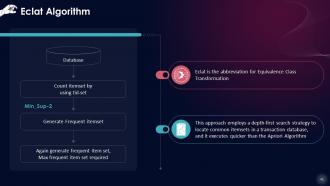
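A minimal Apriori sketch. It builds frequent itemsets level by level, joining frequent (k−1)-itemsets into k-item candidates and pruning any candidate with an infrequent subset, then derives a rule's confidence from the supports. The shopping baskets and the 0.4 support threshold are hypothetical.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return {frozenset(itemset): support} for every itemset with support >= min_support."""
    n = len(transactions)
    sets = [set(t) for t in transactions]

    def support(items):
        return sum(1 for t in sets if items <= t) / n

    frequent = {}
    candidates = {frozenset([item]) for t in sets for item in t}
    k = 1
    while candidates:
        level = {c: support(c) for c in candidates}
        level = {c: s for c, s in level.items() if s >= min_support}
        frequent.update(level)
        k += 1
        # Join step: combine frequent (k-1)-itemsets into k-itemsets
        keys = list(level)
        candidates = {a | b for a in keys for b in keys if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must itself be frequent
        candidates = {c for c in candidates
                      if all(frozenset(s) in level for s in combinations(c, k - 1))}
    return frequent

def confidence(frequent, antecedent, consequent):
    # Confidence(A -> B) = support(A and B together) / support(A)
    a = frozenset(antecedent)
    return frequent[a | frozenset(consequent)] / frequent[a]

baskets = [["bread", "milk"], ["bread", "butter", "milk"], ["bread", "butter"],
           ["milk", "eggs"], ["bread", "butter", "milk"]]
freq = apriori(baskets, min_support=0.4)
print(confidence(freq, ["bread"], ["milk"]))  # about 0.75: 3 of the 4 bread baskets have milk
```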

Slide 42

This slide explains that Eclat stands for Equivalence Class Transformation. This algorithm uses a depth-first search strategy to find frequent itemsets in a transaction database, and it generally runs faster than the Apriori algorithm.
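A minimal Eclat sketch. The algorithm stores each item's set of transaction ids (a "vertical" layout) and explores itemsets depth-first. The support of a longer itemset is simply the size of the intersection of its items' tid-sets, so the database is never rescanned. The baskets and the minimum count are hypothetical.

```python
def eclat(transactions, min_count):
    """Depth-first frequent itemset mining; returns {frozenset(itemset): count}."""
    tids = {}
    for tid, t in enumerate(transactions):
        for item in t:
            tids.setdefault(item, set()).add(tid)
    frequent = {}

    def extend(prefix, candidates):
        # candidates: (item, tidset) pairs that can extend the current prefix
        for i, (item, tidset) in enumerate(candidates):
            itemset = prefix | {item}
            frequent[itemset] = len(tidset)
            # Intersecting tid-sets gives the support of each longer itemset directly
            suffix = [(other, tidset & other_tids) for other, other_tids in candidates[i + 1:]]
            extend(itemset, [(o, s) for o, s in suffix if len(s) >= min_count])

    start = sorted((item, s) for item, s in tids.items() if len(s) >= min_count)
    extend(frozenset(), start)
    return frequent

baskets = [["bread", "milk"], ["bread", "butter", "milk"], ["bread", "butter"],
           ["milk", "eggs"], ["bread", "butter", "milk"]]
freq = eclat(baskets, min_count=2)
```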

Slide 43

This slide explains the F-P Growth algorithm, where F-P stands for Frequent Pattern. The F-P Growth algorithm is an improved version of the Apriori algorithm. It represents the database as a tree structure known as a frequent pattern tree (FP-tree), which is used to extract the most frequent patterns.

Slide 44

This slide explains that association rule learning has many uses in Machine Learning. Some common uses are listed below.

Instructor Notes:

- Market Basket Analysis: This is a well-known example and application of association rule learning. Large retailers often use this method to discover relationships between products

- Medical Diagnosis: Association rules help diagnose patients more readily because they help determine the likelihood of a particular illness

- Protein Sequences: Association rules aid in the creation of synthetic proteins

- Association rules are also used in catalog design, loss-leader analysis, and various other applications

Slide 45

This slide gives an overview of the Hidden Markov Model, a statistical model used in Machine Learning that describes how observable events evolve depending on internal factors (hidden states) that cannot be observed directly.
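A short sketch of how hidden states can be inferred from observations uses the Viterbi algorithm, which finds the most likely hidden-state sequence. The two-state health model and its probabilities are an illustrative textbook-style example, not data from the deck.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (probability, path) of the most likely hidden-state sequence."""
    # best[s] = (probability of the best path ending in state s, that path)
    best = {s: (start_p[s] * emit_p[s][observations[0]], [s]) for s in states}
    for obs in observations[1:]:
        best = {
            s: max(
                (prob * trans_p[prev][s] * emit_p[s][obs], path + [s])
                for prev, (prob, path) in best.items()
            )
            for s in states
        }
    return max(best.values())

# Illustrative model: hidden health state, observed symptoms
states = ("Healthy", "Fever")
start_p = {"Healthy": 0.6, "Fever": 0.4}
trans_p = {"Healthy": {"Healthy": 0.7, "Fever": 0.3},
           "Fever": {"Healthy": 0.4, "Fever": 0.6}}
emit_p = {"Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
          "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6}}
prob, path = viterbi(["normal", "cold", "dizzy"], states, start_p, trans_p, emit_p)
print(path, prob)  # ['Healthy', 'Healthy', 'Fever'] with probability about 0.01512
```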

Slide 46

This slide introduces reinforcement learning, which is inspired directly by how humans learn from data in their daily lives. It uses an algorithm that improves itself through trial and error and learns from new scenarios. Favorable outcomes are encouraged, or 'reinforced,' whereas unfavorable outcomes are discouraged.

Instructor’s Notes: Reinforcement learning works by placing the algorithm in a working environment with an interpreter and a reward system based on the psychological concept of conditioning. In each iteration, the algorithm delivers its output to the interpreter, which assesses whether the outcome is favorable.
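The trial-and-error loop described above can be sketched with tabular Q-learning, one common reinforcement learning algorithm, on a tiny hypothetical corridor. The agent starts at the left end and receives a reward of 1 only when it reaches the right end. The environment, learning rate, discount factor, and exploration rate are all assumptions for illustration.

```python
import random

def q_learning_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a 1-D corridor with reward 1 at the right end."""
    rng = random.Random(seed)
    actions = (-1, +1)  # move left or move right
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy: usually exploit, sometimes explore (random on ties)
            if rng.random() < epsilon or q[(s, -1)] == q[(s, +1)]:
                a = rng.choice(actions)
            else:
                a = max(actions, key=lambda act: q[(s, act)])
            s_next = min(max(s + a, 0), goal)
            reward = 1.0 if s_next == goal else 0.0
            # Reinforcement: move Q toward reward + discounted best future value
            future = 0.0 if s_next == goal else gamma * max(q[(s_next, b)] for b in actions)
            q[(s, a)] += alpha * (reward + future - q[(s, a)])
            s = s_next
            if s == goal:
                break
    return q

q = q_learning_corridor()
policy = [max((-1, +1), key=lambda a: q[(s, a)]) for s in range(4)]
print(policy)  # the learned greedy policy moves right (+1) in every non-goal state
```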

Slide 48

This slide describes the importance of Machine Learning: it gives organizations insight into trends in consumer behavior and business operating patterns, and it supports the development of new products. Many major organizations, such as Facebook, Google, and Uber, have made Machine Learning a core part of their operations.

Slide 49

This slide lists the seven key steps of Machine Learning, from data collection to making predictions, which make the goal of giving computers intelligence more manageable.

Slide 50

This slide describes the first step of Machine Learning: Data Collection. Machines begin by learning from the data that you provide them. It is critical to obtain reliable data so your Machine Learning model can identify the correct patterns. Be sure to use data from a reputable source, as this directly affects your model's output. Good data is relevant, has few missing or duplicate values, and accurately represents the subcategories/classes present.

Slide 51

This slide lists the major points involved in Data Preparation. First, put all of your data together and randomize its order, which ensures the data is evenly distributed and that the ordering does not affect the learning process. Then split the cleaned data into two sets, one for training and one for testing. The training set is the set from which your model learns, and the testing set is used to evaluate the accuracy of your model after it has been trained.
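The randomize-then-split step can be sketched in a few lines of standard-library Python. The 80/20 ratio and the fixed seed (used so the split is reproducible) are common conventions assumed here, not requirements from the deck.

```python
import random

def shuffle_and_split(rows, labels, test_fraction=0.2, seed=42):
    """Randomize the example order, then hold out a fraction of the data for testing."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    indices = list(range(len(rows)))
    rng.shuffle(indices)
    n_test = round(len(rows) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    def pick(idx):
        return [rows[i] for i in idx], [labels[i] for i in idx]

    return pick(train_idx), pick(test_idx)

rows = [[i] for i in range(10)]
labels = [i % 2 for i in range(10)]
(train_x, train_y), (test_x, test_y) = shuffle_and_split(rows, labels)
```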

Slide 52

This slide explains that a Machine Learning model is the result of running a Machine Learning algorithm on the collected data. It is critical to select a model that suits the task at hand. Scientists and engineers have developed models suited to tasks such as speech recognition, image recognition, prediction, and so on.

Slide 53

This slide explains that training is the most critical phase in Machine Learning. During training, you feed the prepared data to your Machine Learning model so that it can find patterns and make predictions.

Slide 54

This slide gives an overview of model evaluation. After training your model, you will want to see how well it performs. This is done by assessing the model's performance on unseen data. If testing is done on the same data used for training, you will get an inflated measure of accuracy, because the model is already familiar with that data and recognizes the same patterns it saw before.
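The inflated-accuracy problem can be shown with a 1-nearest-neighbor model, which effectively memorizes its training set. It scores perfectly on data it has seen but noticeably worse on held-out points. The one-dimensional data with noisy labels is hypothetical.

```python
def one_nn_predict(train_x, train_y, x):
    # 1-nearest-neighbor: returns the label of the closest training point
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]

def accuracy(train_x, train_y, eval_x, eval_y):
    correct = sum(one_nn_predict(train_x, train_y, x) == y for x, y in zip(eval_x, eval_y))
    return correct / len(eval_x)

# Hypothetical 1-D data whose training labels contain some noise
train_x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
train_y = [0, 0, 1, 0, 1, 1, 0, 1]
test_x = [1.5, 2.6, 3.4, 5.5, 6.6, 7.4]
test_y = [0, 0, 0, 1, 1, 1]

train_acc = accuracy(train_x, train_y, train_x, train_y)  # data the model has seen: 1.0
test_acc = accuracy(train_x, train_y, test_x, test_y)     # unseen data: much lower
```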

Slide 55

This slide lists the steps involved in parameter tuning. After you have built and evaluated your model, check whether you can improve its accuracy. This is done by fine-tuning the parameters of your model. These parameters (often called hyperparameters) are settings in the model that are usually chosen by the programmer. Accuracy is highest at particular parameter values, and finding these values is referred to as parameter tuning.
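A minimal sketch of parameter tuning as a simple grid search. The example tries each candidate value of k (the number of neighbors in a KNN classifier) and keeps the one with the best accuracy on a held-out validation set. The candidate grid and the hypothetical data are assumptions. In practice, the final model should then be checked on a separate test set that was not used for tuning.

```python
from collections import Counter

def knn_predict_1d(train_x, train_y, x, k):
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]

def tune_k(train_x, train_y, val_x, val_y, candidate_ks):
    """Try each candidate k and keep the one with the best validation accuracy."""
    scores = {}
    for k in candidate_ks:
        hits = sum(knn_predict_1d(train_x, train_y, x, k) == y for x, y in zip(val_x, val_y))
        scores[k] = hits / len(val_x)
    best_k = max(candidate_ks, key=lambda k: scores[k])
    return best_k, scores

train_x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
train_y = [0, 0, 1, 0, 1, 1, 0, 1]
val_x = [1.5, 2.6, 3.4, 5.5, 6.6, 7.4]
val_y = [0, 0, 0, 1, 1, 1]
best_k, scores = tune_k(train_x, train_y, val_x, val_y, candidate_ks=[1, 3, 5])
print(best_k, scores)  # k = 3 smooths over the noisy labels better than k = 1
```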

Slide 56

This slide gives an overview of prediction. Prediction refers to the output of an algorithm that has been trained on a historical dataset and then applied to new data to estimate the likelihood of a particular outcome, such as whether a customer will churn within 30 days.

Slide 57

This slide lists the advantages of Machine Learning: trends and patterns are identified easily; no human intervention is needed (automation); continuous improvement; handling of multidimensional and diverse data; and new applications are always in the works.

Slide 58

This slide states the disadvantages of Machine Learning: data collection, time and resources, interpretation of results, and high vulnerability to errors.

Instructor Notes:

- Data collection: Machine Learning requires large datasets to train on, which must be inclusive/unbiased and of high quality. Teams may also need to wait for new data to be generated

- Time and resources: ML needs enough time for the algorithms to learn and evolve sufficiently to accomplish their purpose with high accuracy and relevance. It also demands significant resources to run, which may mean additional computing power

- The interpretation of results: Another significant challenge is the ability to correctly interpret the results generated by the algorithms. You need to choose the algorithms for your application carefully

- High vulnerability to errors: Because Machine Learning operates autonomously, it is prone to errors. Suppose you train an algorithm on datasets that are too small to be representative. A biased training set produces biased predictions, and as a result, customers may be shown irrelevant advertisements. In Machine Learning, such errors can trigger a cascade of mistakes that can go undetected for long periods. When they are detected, it takes a long time to identify the root cause of the problem and even longer to fix it.

Slide 59

This slide lists the five domains envisioned for future Machine Learning improvements.

Instructor Notes:

- More Accurate Web Search Results: While scrolling through Google search results for an article, one may be unaware that the ranking and order of those results are determined deliberately. Machine Learning techniques have recently had a significant influence on search engine results. As neural networks continue to expand and evolve with emerging deep learning techniques, future search engines will become substantially better at returning highly relevant responses to online searchers

- Precise, Tailor-Made Customization: Corporations can use Machine Learning to refine their understanding of their target audience, which can improve existing products, inform new product creation and merchandising, and increase gross revenue. With further developments and discoveries in the dynamic field of Machine Learning and its algorithms, we will begin to see precise targeting and fine-tuned customization for customers at scale

- Rise of Quantum Computing: Quantum computing is expected to play a significant role in the future of Machine Learning. With faster processing, faster learning, increased capacity, and enhanced capabilities, integrating quantum computing into Machine Learning could transform the field. Complex challenges that cannot be addressed with traditional approaches and current technology might then be solved in a fraction of a second.

- Data Units are Increasing at a Rapid Pace: Further advances in Machine Learning are expected to improve the day-to-day operations of data units and help them achieve their goals more efficiently. Machine Learning will be one of the foundational ways of building, maintaining, and developing digital applications in the coming decades. This means data curators and technology developers will spend less time programming and updating ML approaches and can instead focus on understanding and continually improving their processes

- Fully Automated Self-Learning Systems: Machine Learning will become simply another element of software engineering. In addition to standardizing how people build Machine Learning algorithms, open-source frameworks such as Keras, PyTorch, and TensorFlow have removed many of the fundamental barriers to entry. Some of this may seem utopian, but with so much technology, information, and resources available online, such ecosystems are slowly but surely emerging. This would result in environments that require near-zero coding and the emergence of fully automated systems

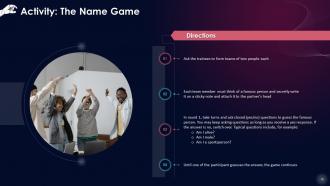

Slides 74 to 89

These slides contain energizer activities to engage the audience of the training session.

Slides 90 to 117

These slides contain a training proposal covering what the company providing corporate training can accomplish for the client.

Unveiling The Core Concepts Of Machine Learning Training Ppt with all 122 slides:

Use our Unveiling The Core Concepts Of Machine Learning Training Ppt to effectively help you save your valuable time. They are readymade to fit into any presentation structure.
